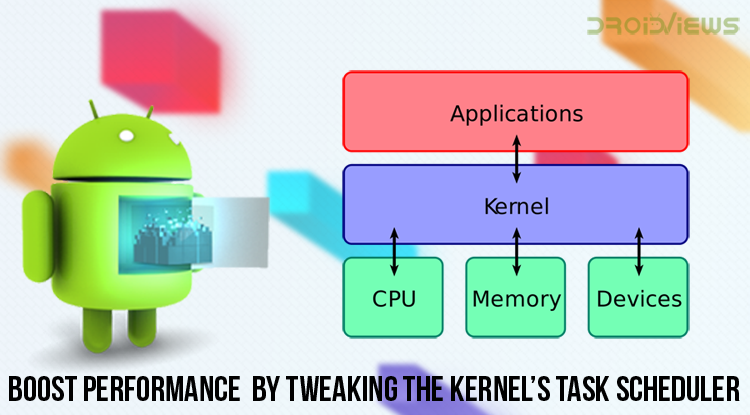

Welcome back to another guide on tweaking the kernel Task Scheduler on Android. In the previous article, we covered some basic Task Scheduler configuration options that can be tuned manually to improve performance on Android devices. If you haven’t read that article yet, we strongly recommend doing so before proceeding, as it explains how the Task Scheduler works and how its basic parameters are tuned.

Tweaking the Kernel’s Task Scheduler

Apart from the basic parameters discussed in the previous article, the Linux Task Scheduler implements some special features that try to make its distribution of processing power fairer. These features do not produce the same results on all systems and workloads, so they can be enabled or disabled at runtime through the kernel debugfs. Not all kernels support changing scheduler features this way, though: the kernel must be built with debugfs and CONFIG_SCHED_DEBUG enabled. You can check whether your kernel supports changing these features by entering the following command in a terminal app (as superuser):

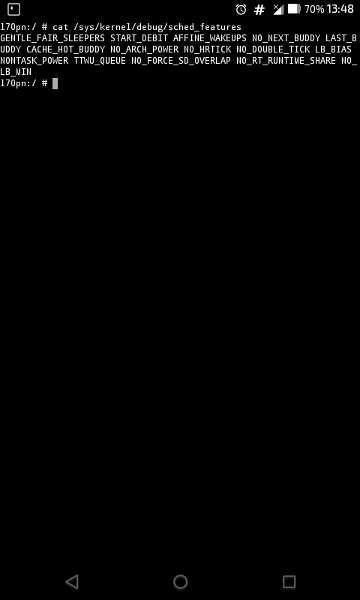

cat /sys/kernel/debug/sched_features

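If the command prints a single line of feature names separated by spaces (for example GENTLE_FAIR_SLEEPERS START_DEBIT NO_NEXT_BUDDY LAST_BUDDY and so on), your kernel supports changing scheduler features at runtime.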

If you get a “No such file or directory” error instead, debugfs may be available but not mounted at boot. In that case, try mounting it:

mount -t debugfs none /sys/kernel/debug

If this command does not produce an error, run the first command again.
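The check above can be automated with a small shell sketch. It assumes a POSIX-style shell (Android's mksh should do) and superuser rights for the mount step. The function names are hypothetical, and the second location it tries, sched/features under debugfs, is an assumption for newer mainline kernels (5.13 and later, as far as we know) rather than something older Android kernels use.

```shell
# Hypothetical helper: print the path of the scheduler features file under a
# debugfs root (default /sys/kernel/debug). Returns 1 if it is not found.
sched_features_path() {
    local root="${1:-/sys/kernel/debug}"
    local f
    # Older kernels: <root>/sched_features
    # Newer mainline kernels (assumption: 5.13+): <root>/sched/features
    for f in "$root/sched_features" "$root/sched/features"; do
        if [ -r "$f" ]; then
            printf '%s\n' "$f"
            return 0
        fi
    done
    return 1
}

# Hypothetical helper: look for the file; if it is missing, try to mount
# debugfs on the root directory once and look again. Needs superuser rights.
ensure_sched_features() {
    local root="${1:-/sys/kernel/debug}"
    sched_features_path "$root" && return 0
    mount -t debugfs none "$root" 2>/dev/null || return 1
    sched_features_path "$root"
}
```

For example, path=$(ensure_sched_features) && cat "$path" prints the current feature list, and returns a non-zero status without output if the kernel offers no support.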

Toggling A Scheduler Debug Feature

To enable a scheduler feature, write its name to the sched_features file. For example:

echo GENTLE_FAIR_SLEEPERS > /sys/kernel/debug/sched_features

To disable a feature, write its name to the same file with a “NO_” prefix. For example:

echo NO_GENTLE_FAIR_SLEEPERS > /sys/kernel/debug/sched_features
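Writing a name does not tell you whether the kernel accepted it, so it can help to read the file back afterwards. The hypothetical helper below does both; it assumes the default debugfs location unless you pass another path.

```shell
# Hypothetical helper: enable (on) or disable (off) one scheduler feature,
# then read the file back to confirm the change took effect.
# Usage: set_sched_feature NAME on|off [FILE]
set_sched_feature() {
    local name="$1" state="$2"
    local file="${3:-/sys/kernel/debug/sched_features}"
    local token
    case "$state" in
        on)  token="$name" ;;          # e.g. GENTLE_FAIR_SLEEPERS
        off) token="NO_$name" ;;       # e.g. NO_GENTLE_FAIR_SLEEPERS
        *)   echo "usage: set_sched_feature NAME on|off [FILE]" >&2
             return 2 ;;
    esac
    # The kernel should reject the write if it does not recognize the name.
    echo "$token" > "$file" || return 1
    # Enabled features are listed as NAME, disabled ones as NO_NAME.
    tr ' ' '\n' < "$file" | grep -qx "$token"
}
```

For example, set_sched_feature GENTLE_FAIR_SLEEPERS off disables the feature and returns 0 only if NO_GENTLE_FAIR_SLEEPERS shows up in the file afterwards. Changes made this way take effect immediately but are lost on reboot, so they must be re-applied from a boot script if you want to keep them.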

Most Common Scheduler Debug Features

It is not guaranteed that a specific kernel will support all the scheduler features discussed below; support varies between kernel versions and between device implementations. The supported features are exactly those listed in the output of the command ‘cat /sys/kernel/debug/sched_features’. Features that start with “NO_” are supported but currently disabled.
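Because disabled features carry the NO_ prefix, that output can be turned into a readable on/off list. A minimal sketch, with a hypothetical function name:

```shell
# Hypothetical helper: print every supported scheduler feature with its
# current state. Features listed with a NO_ prefix are supported but off.
list_sched_features() {
    local file="${1:-/sys/kernel/debug/sched_features}"
    local f
    # The file is one line of space-separated names, so word splitting
    # on the unquoted expansion yields one feature per iteration.
    for f in $(cat "$file"); do
        case "$f" in
            NO_*) echo "${f#NO_}: off" ;;
            *)    echo "$f: on" ;;
        esac
    done
}
```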

GENTLE_FAIR_SLEEPERS

When a sleeper (a process that tends to sleep for long periods) wakes up, the scheduler gives it a small credit so that it can run soon. GENTLE_FAIR_SLEEPERS halves this credit, which reduces the run time given to sleepers and leaves more of it to active tasks. Some users report that disabling this feature might improve responsiveness on low-end devices.
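In the classic CFS code (as found in, for example, Linux 4.x and 5.x kernels), a waking sleeper's virtual runtime is placed as below, where v is the task's own virtual runtime, v_min the run queue's minimum virtual runtime and L the scheduler latency (the sched_latency_ns tunable). The 6 ms value is only an illustration; the actual latency depends on the kernel and the number of CPUs.

$$
v_{\text{wake}} = \max\left(v,\; v_{\min} - \theta\right),
\qquad
\theta =
\begin{cases}
L/2 & \text{if GENTLE\_FAIR\_SLEEPERS is enabled},\\
L & \text{if it is disabled}.
\end{cases}
$$

$$
L = 6\ \text{ms}:\qquad \theta = 3\ \text{ms (enabled)},\qquad \theta = 6\ \text{ms (disabled)}
$$

After a long sleep, max() picks v_min - θ, so the waking task starts with a virtual runtime 3 ms or 6 ms lower than v_min and is picked sooner. The max() also ensures a task cannot gain extra credit simply by sleeping longer.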

AFFINE_WAKEUPS

Places a task that wakes up on the same CPU as the task that woke it. This assumes that the woken task will work on the same data in memory as the waker, so scheduling it on the same CPU improves cache locality (read below for more information about cache locality).

RT_RUNTIME_SHARE

We need some deeper Task Scheduler knowledge to understand this feature. On Linux, each task has a scheduling policy, which defines how important the task is and how much CPU time it gets from the scheduler. Most tasks run under SCHED_OTHER (named SCHED_NORMAL in the kernel source). Other policies are SCHED_BATCH, SCHED_RR, SCHED_FIFO and SCHED_DEADLINE. The last three are real-time policies, designed for tasks that must run as soon as they become runnable, ahead of normal tasks.
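To see which policy a task is using, you can read it from procfs. The sketch below parses field 41 (policy) of /proc/&lt;pid&gt;/stat, as documented in proc(5); the numbers are the kernel's SCHED_* constants. The function name is hypothetical, and the optional proc root argument exists only so the parsing can be tried on a copied stat file.

```shell
# Hypothetical helper: print the scheduling policy of a process.
# $1 = pid (default: this shell), $2 = proc root (default /proc).
sched_policy_of() {
    local pid="${1:-$$}" proc="${2:-/proc}"
    local policy
    [ -r "$proc/$pid/stat" ] || return 1
    # Field 2 (comm) may contain spaces, so drop everything up to the last
    # ") ". The remaining text starts at field 3, so field 41 is word 39.
    policy=$(sed 's/.*) //' "$proc/$pid/stat" | cut -d ' ' -f 39)
    case "$policy" in
        0) echo SCHED_OTHER ;;      # SCHED_NORMAL inside the kernel
        1) echo SCHED_FIFO ;;
        2) echo SCHED_RR ;;
        3) echo SCHED_BATCH ;;
        5) echo SCHED_IDLE ;;
        6) echo SCHED_DEADLINE ;;
        *) echo "unknown ($policy)" ;;
    esac
}
```

For example, sched_policy_of 1 prints the policy of the init process; most tasks will report SCHED_OTHER.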

The Task Scheduler limits real-time tasks with two parameters, rt_period and rt_runtime, which are enforced on each CPU. rt_period is the length of the accounting period, which corresponds to 100% of a CPU's time. rt_runtime is the maximum time within each period that the CPU may spend running real-time tasks; the remaining [rt_period - rt_runtime] is left for all other tasks. By default, real-time tasks may use up to 95% of a CPU’s time (950 ms out of every 1 s), and at least 5% is reserved for everything else.
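These two parameters are exposed as the sysctls /proc/sys/kernel/sched_rt_period_us and /proc/sys/kernel/sched_rt_runtime_us, both in microseconds. The sketch below reads them and computes the split as rt_runtime / rt_period; the function name is hypothetical, and the directory argument exists only so it can be tried on copies of those files.

```shell
# Hypothetical helper: show how each period is split between real-time
# tasks and all other tasks. $1 = sysctl directory (default /proc/sys/kernel).
rt_bandwidth() {
    local dir="${1:-/proc/sys/kernel}"
    local period runtime
    period=$(cat "$dir/sched_rt_period_us") || return 1
    runtime=$(cat "$dir/sched_rt_runtime_us") || return 1
    # A runtime of -1 disables real-time throttling altogether.
    if [ "$runtime" -lt 0 ]; then
        echo "real-time tasks: unlimited (throttling disabled)"
        return 0
    fi
    # Integer percentages of rt_runtime / rt_period and of the remainder.
    echo "real-time tasks: up to $(( runtime * 100 / period ))% of each ${period}us period"
    echo "other tasks: at least $(( (period - runtime) * 100 / period ))%"
}
```

With the default values 1000000 and 950000, this prints a 95% share for real-time tasks and 5% for all others.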

RT_RUNTIME_SHARE allows a CPU that has used up its rt_runtime to borrow unused rt_runtime from other CPUs, so that it can run real-time tasks for up to 100% of the period. The CPUs that lend rt_runtime can then spend more time on non-realtime tasks. Some custom kernel developers disable this feature by default, because a CPU that borrows its whole period can no longer service non-realtime tasks that are bound to it, which decreases performance.

NEXT_BUDDY

Generally on Linux, a task that wakes up may preempt (take the place of) the task currently running on the CPU; this is called wakeup preemption. NEXT_BUDDY handles the case where the woken task does not preempt the current one: when NEXT_BUDDY is enabled, the scheduler prefers to run the woken task at the next scheduling event. Since that task is likely to use data the current task has just touched, this feature also improves cache locality.

LAST_BUDDY

When wakeup preemption succeeds, LAST_BUDDY makes the scheduler prefer to run the preempted task (the one that ran just before the wakeup) next, since it is likely to touch the same data again. This also improves cache locality.

WAKEUP_PREEMPTION

This feature controls the wakeup preemption behavior discussed above. Most kernels enable it by default. Disabling it reduces how often running tasks are preempted, which might help on heavily loaded systems.

Cache Memory and Cache Locality

A key goal of modern CPU design is to minimize the latency between fetching data from memory and processing it. RAM, even in its latest generations, is quite slow by comparison: fetching data from RAM usually takes hundreds of CPU cycles.

To overcome this, modern CPUs include a special type of fast memory known as cache memory. There are usually several levels of cache, named L1, L2, L3 and so on. The letter “L” stands for level, and L1 is the cache closest to the processor core. The higher the level, the larger and slower the cache; on the other hand, higher-level caches are cheaper to manufacture per byte. Still, all cache memory is much more expensive per byte than ordinary RAM, which is why most CPUs include only a small amount of it (a few MB).
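On most Linux kernels you can see these cache levels for yourself in sysfs. The sketch below reads the cacheinfo files level, type and size under /sys/devices/system/cpu/cpuN/cache/; the function name is hypothetical, and some vendor kernels may not populate these files at all.

```shell
# Hypothetical helper: list the caches the kernel reports for one CPU.
# $1 = CPU number (default 0), $2 = sysfs CPU root (default shown below).
list_cpu_caches() {
    local cpu="${1:-0}" root="${2:-/sys/devices/system/cpu}"
    local d
    for d in "$root/cpu$cpu"/cache/index*; do
        # If nothing matches, the glob stays unexpanded: skip it.
        [ -d "$d" ] || continue
        echo "L$(cat "$d/level") $(cat "$d/type"): $(cat "$d/size")"
    done
}
```

Each output line has the form "L1 Data: 32K", with the values taken from your own device.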

Typically, when a CPU needs some data from memory, it first looks in the caches in order, starting with L1. Every time it has to move on to a higher-level cache, latency increases. If the data is not found in any cache, the CPU has to fetch it from RAM, which increases latency even more.
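The cost of climbing this hierarchy can be estimated with the usual average-access-time formula, where t are access latencies in CPU cycles and m are miss rates. The values used below (t_{L1} = 4, t_{L2} = 12, t_{RAM} = 200, m_{L2} = 0.5) are made-up illustrations, not measurements of any particular CPU.

$$
t_{\text{avg}} = t_{L1} + m_{L1}\,\bigl(t_{L2} + m_{L2}\,t_{\text{RAM}}\bigr)
$$

$$
m_{L1} = 0.1:\quad t_{\text{avg}} = 4 + 0.1\,(12 + 0.5 \cdot 200) = 4 + 11.2 = 15.2\ \text{cycles}
$$

$$
m_{L1} = 0.2:\quad t_{\text{avg}} = 4 + 0.2\,(12 + 0.5 \cdot 200) = 4 + 22.4 = 26.4\ \text{cycles}
$$

In this example, doubling the L1 miss rate, for instance because a task was moved away from the CPU whose cache held its data, raises the average access time by about 74%.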

It is up to the operating system and to application developers to make good use of cache memory. The operating system should try to run the tasks of the same application on CPUs that share a cache, so that application data can be accessed quickly. Developers should design programs that keep related data close together in memory. Keeping related data within the same cache areas is known as “cache locality”.

Android and Cache Locality

Android devices that ship with a single cluster of identical cores (e.g. a Qualcomm msm8226 device) usually have one L2 cache shared by all cores, while each core has its own L1 cache. These devices are easier for the kernel Task Scheduler to manage. In contrast, devices that implement the big.LITTLE architecture (heterogeneous multi-processing, HMP) are more complex: they pack two clusters of processors, each with its own L2 cache. The Linux Task Scheduler includes support for these devices, but vendor implementations often need additional code changes to run efficiently.
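To see how the CPUs of your own device are grouped, you can check which CPUs share each cache. The sketch below reads the standard sysfs cacheinfo file shared_cpu_list; the function name is hypothetical, and some vendor kernels do not populate cacheinfo. For level 2 you would expect a single line such as 0-3 on an msm8226-like device, and two lines such as 0-3 and 4-7 on a classic 4+4 big.LITTLE SoC. Newer designs that give each core a private L2 share an L3 instead, so pass 3 there.

```shell
# Hypothetical helper: print each distinct set of CPUs that share a cache
# of the given level (default 2). On many ARM SoCs each set is a cluster.
cpus_sharing_cache_level() {
    local level="${1:-2}" root="${2:-/sys/devices/system/cpu}"
    local d
    for d in "$root"/cpu[0-9]*/cache/index*; do
        [ -f "$d/level" ] || continue
        [ "$(cat "$d/level")" = "$level" ] || continue
        cat "$d/shared_cpu_list"
    done | sort -u    # CPUs in one cluster report the same list once
}
```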

Final Thoughts

The features discussed above are complex, and their performance impact differs from device to device. For example, low-end devices might show better performance with GENTLE_FAIR_SLEEPERS and RT_RUNTIME_SHARE disabled. Keeping WAKEUP_PREEMPTION enabled might also make these devices feel more responsive.

Things are different on big.LITTLE platforms, which have more than one CPU cluster. Moving a child process or thread from a big core to a little core, or vice versa, adds latency, because its data has to be copied into the other cluster's cache. You can minimize this effect by enabling features that improve cache locality, such as AFFINE_WAKEUPS and LAST_BUDDY.
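The suggestions above can be collected into profiles. The sketch below (hypothetical function name) applies a list of settings in the same NAME / NO_NAME form the sched_features file uses, and skips any feature the running kernel does not list, so the same profile can be tried on different kernels.

```shell
# Hypothetical helper: apply feature settings (NAME enables a feature,
# NO_NAME disables it), skipping features this kernel does not list.
# Usage: apply_sched_profile FILE SETTING...
apply_sched_profile() {
    [ $# -ge 1 ] || return 2
    local file="$1"
    shift
    local supported want base rc=0
    [ -r "$file" ] || return 1
    # Read the supported list once, padded with spaces for whole-word matching.
    supported=" $(tr '\n' ' ' < "$file") "
    for want in "$@"; do
        base="${want#NO_}"
        case "$supported" in
            *" $base "*|*" NO_$base "*) ;;
            *) echo "skipping $base: not supported by this kernel" >&2
               continue ;;
        esac
        echo "$want" > "$file" || rc=1
    done
    return "$rc"
}
```

For example, apply_sched_profile /sys/kernel/debug/sched_features NO_GENTLE_FAIR_SLEEPERS NO_RT_RUNTIME_SHARE on a low-end device, or apply_sched_profile /sys/kernel/debug/sched_features AFFINE_WAKEUPS LAST_BUDDY on a big.LITTLE device.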
